Social and Affective Machine Learning

Abstract

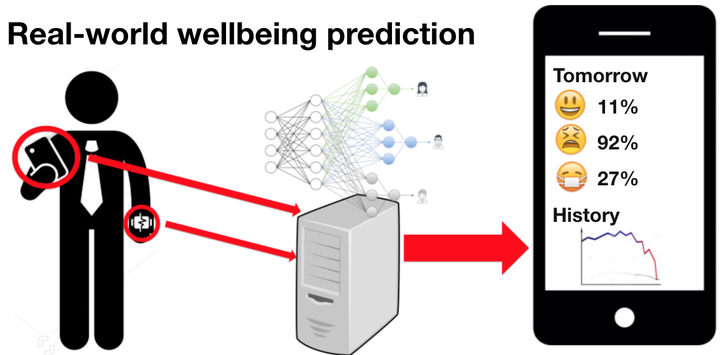

Social learning is a crucial component of human intelligence, allowing us to rapidly adapt to new scenarios, learn new tasks, and communicate knowledge that can be built on by others. This dissertation argues that the ability of artificial intelligence (AI) to learn, adapt, and generalize to new environments can be enhanced by mechanisms that allow for social learning. I propose several novel deep learning and reinforcement learning methods that improve the social and affective capabilities of AI through social learning both from humans and from other AI agents. First, I show how AI agents can learn from the causal influence of their actions on other agents, leading to enhanced coordination and communication in multi-agent reinforcement learning. Second, I investigate learning socially from humans, using non-verbal and implicit affective signals such as facial expressions and sentiment. This ability to optimize for human satisfaction through sensing implicit social cues can enhance human-AI interaction, and guide AI systems to take actions aligned with human preferences. Learning from human interaction with reinforcement learning, however, may require dealing with sparse, off-policy data, without the ability to explore online in the environment – a situation that is inherent to safety-critical, real-world systems that must be tested before being deployed. I present several techniques that enable learning effectively in this challenging setting. Experiments deploying these models to interact with humans reveal that learning from implicit, affective signals is more effective than relying on humans to provide manual labels of their preferences, a task that is cumbersome and time-consuming. However, learning from humans’ affective cues requires recognizing them first.
In the third part of this dissertation, I present several machine learning methods for automatically interpreting human data and recognizing affective and social signals such as stress, happiness, and conversational rapport. I show that personalizing such models using multi-task learning achieves large performance gains in predicting highly individualistic outcomes like human happiness. Together, these techniques create a framework for building socially and emotionally intelligent AI agents that can flexibly learn from each other and from humans.